Last Updated on 22 February 2020 by Eric Bretscher

This article is part of a series dealing with building best-in-class lithium battery systems from bare cells, primarily for marine use, but a lot of this material finds relevance for low-voltage off-grid systems as well.

Integrating a lithium battery bank on board a vessel introduces a few additional constraints and challenges that don’t exist with lead-acid batteries. Let’s consider two key statements:

A key difference between a lead-acid and a lithium battery is that the former can be damaged safely

While this may come across as provocative, it is nevertheless very true. Overcharging or flattening of a lead-acid battery is detrimental to its life. That’s about it. A lithium battery quickly gets totally destroyed and becomes a fire risk in the same circumstances.

Another main difference between a lead-acid and a lithium electrical system is that, in the second instance, the battery may become entirely disconnected from the installation, which can result in considerable damage

Protecting a lithium battery from damage may ultimately require isolating it from the system following a dangerous adverse event. A charge regulation failure or a complete discharge, for example, are such events. Unfortunately, marine DC electrical systems tend to include charging sources that are not designed to operate or cope without a battery in circuit: disconnecting the battery has a strong potential for causing malfunctions and sometimes considerable and very expensive collateral damage.

The battery is the base load in the charging system and is required to prevent the voltage from spiking up, sometimes considerably; many charge regulators cannot function or regulate properly without it.

In this article, we will discuss some avenues and options to design systems taking care of these aspects.

Disclaimer

A good understanding of DC electrical systems is needed to build and commission a lithium battery installation. This article is aimed at guiding the process, but it is not a simple blind recipe for anyone to follow.

The information provided here is hopefully thorough and extensive. It reflects the knowledge I have accumulated building some of these systems. There is no guarantee that it will not change or grow over time. It is certainly not sufficient or intended to turn a novice into an electrical engineer either. You are welcome to use it to build a system, but at your own risk and responsibility.

Basic Electrical System Design for Lithium

Due to the above considerations, the electrical system on board needs to conform with a model that allows battery disconnection without creating additional problems. In nearly all instances, alterations need to be made to the existing installation before a lithium battery bank can be considered. This assessment should take place before anything else.

There are absolutely no issues with electrical consumers on board; the voltage out of a lithium battery bank not only is within the range of what is experienced with lead-acid systems, but also exhibits less variation. A typical lead-acid system operates between 11.5V and 14.4V (less for gel cells). While the practical voltage range of a lithium system extends from 12.0V to 14.2V at the very most, the bulk of the cycling takes place between 13.0V and 13.4V only.

The challenge resides with charging sources and the risk of seeing them being disconnected, including under load, or even worse, directly feeding into the boat’s electrical system without a battery present.

Dual DC Bus Systems

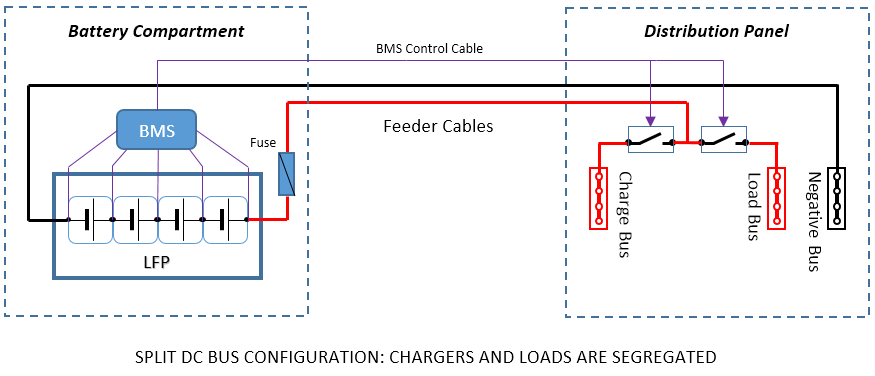

Dual DC bus systems represent the optimal solution in reliability, resilience and functionality with lithium batteries:

- Power on board is not lost if an issue is detected with a cell reading excessive voltage. This can happen if a charger regulates poorly, cell imbalance is developing, or there is a system setup issue.

- A low-voltage disconnect doesn’t compromise recharging and the system has a chance to recover by itself.

This makes the dual DC bus topology very desirable on board marine vessels, but it also comes with higher engineering requirements.

The conversion of an existing installation to use a lithium battery bank with a dual bus system first entails segregating charging sources from electrical loads. Skipping this step is not really possible unless another (lead-acid) battery remains in circuit after the lithium bank is disconnected.

Twin battery disconnectors are at the heart of all dual DC bus lithium systems. Those are top-quality Tyco Electronics latching relays that offer zero standby consumption and a 260A continuous current capacity. The battery bank connects on the middle post, while the load and charge DC buses tie on the sides.

Creating a separate charge bus and load bus normally requires some rework of the heavy current cabling. Choosing a judicious location for the disconnector relays goes a long way towards minimising the impact of the changes. Electrical distribution is normally either carried out close to the battery compartment, or a feeder cable runs from the batteries to a distribution panel where the main positive and negative busbars are located.

Occasionally, marine electrical systems conform to another topology known as a Rat’s Nest. Those need to be pulled out before any further considerations

In essence, the positive busbar must be duplicated to separate charging sources from loads; the negative busbar normally stays as it is. The battery disconnectors are inserted close to this point to tie the bank into the system and any feeder cables normally remain unaffected.

The split DC bus configuration is the gold standard in terms of reliability and functionality for lithium battery installations. It is the preferred pathway for engineering elaborate lithium-only systems and for critical applications as it allows for specific and optimal responses to both excessive charge and discharge situations. Achieving this result requires capable equipment and good system design.

Controlling a dual DC bus system requires a BMS offering suitable outputs: this is not commonly found on solutions intended for electric vehicle (EV) conversions, which tend to rely on a single “disconnect all” contactor.

Attempting to build a dual bus system with an inadequate BMS all too often results in installations where both buses can (and therefore will, sooner or later) end up connected with no battery to charge; at this point, an unregulated charging voltage usually gets fed straight through into the boat’s electrical system, leading to a memorably expensive wholesale fry up. The ultimate in terms of the depth of thoughts afforded by the incident is when it happens at sea.

Key Challenges with Dual DC Bus Lithium Systems

It is fair to say that, today, a majority of DIY dual DC bus lithium systems contain critical design flaws their owners are often unaware of, or have decided to ignore because they could not solve them properly. This is often related to the use of some junk-grade or unsuitable BMS solution, carefully selected for no other reason than that others have used it, coupled with a lack of design analysis.

A system is not good because it works, it is only good if it can’t malfunction or fail under any unusual circumstances

Dual DC bus systems come with two challenges associated with the potential disconnection under load of the charge bus or the load bus. A charge bus disconnect event is typically associated with a high-voltage event, while the load bus normally drops out due to an under-voltage situation at the battery.

Issues Associated with a Charge Bus Disconnect and Possible Solutions

In case of a high-voltage event causing a charge bus disconnection, charging sources can end up:

- Disconnected under load, which can destroy some charging devices by causing their output voltage to spike; and

- Subsequently linked together with no battery to charge, which can also result in damage due to excessive voltages for some devices. Many charge controllers require the presence of a large capacitive load (the battery) to operate correctly.

These two situations need to be analysed carefully and mitigated if required.

Typical examples:

- A simple PWM solar charge controller switches the panels on and off rapidly to keep the battery voltage at a setpoint. The voltage varies very little because the battery absorbs the current while the panels are turned on. If the battery is removed, the open-circuit voltage of the panels is directly transferred to the output and injected into the charge bus: this means about 22V at times with the standard 36-cell panels used in 12V nominal installations.

While this doesn’t really matter in itself and the controller can always take it, if other charging devices are also connected to the charge bus, they suddenly get exposed to that voltage that may prove excessive.

- Many simple wind generators can be disconnected under load without getting damaged (as long as they don’t reach excessive speeds afterwards), but a very significant voltage spike can result, high enough to damage other electronic charge controllers that would happen to share the charge bus.

High voltages also keep being produced at the output afterwards if the unit spins up. This is generally completely unacceptable.

- Some modern wind generators can’t be disconnected at all under load, or their charge controller will be destroyed by the resulting voltage surge.

- Some, but not all, MPPT charge controllers can fail from an output voltage spike if disconnected under (heavy) load. Good quality units use buck stages implementing cycle-by-cycle limiting and can in fact regulate their output even under no load.

- Alternators nearly always fail with considerable damage to the rectifiers and regulator if disconnected under load. Interrupting the current causes a collapse of the magnetic field in the stator, which induces an intense surge, sometimes in excess of 100V.

The best and simplest avenue, by far, would be using charging equipment that can be disconnected under load without issues and won’t output wildly unregulated voltages if there is no battery to charge. Unfortunately, this is not always practical, as in the case of alternators, and economics can favour trying to keep pre-existing gear. Keeping it is not always feasible, however, for a number of reasons, and this can considerably increase the cost of a system conversion from lead-acid to lithium-ion.

Typical solutions to address these problems fall into three categories.

Disabling the Device in Advance

This involves turning off the charging device before it gets disconnected:

- Alternators can be disabled by interrupting the field circuit with a relay.

- Shore power chargers can be disconnected on the mains side.

- Wind generators often need to be diverted into a dump load or a short-circuit, which stops them.

- If concerns exist with solar systems, disconnecting the panels before the charge controller is an effective measure and is normally safe to do.

- Many externally-regulated wind generators are best disconnected (and short-circuited) before the charge controller as well.

In all cases, powering a relay or other disconnection device to disable a charging source is completely unacceptable. These systems must be fail-safe and not charge by default in the absence of control signal, so disabled charging sources can’t restart producing power after the battery has been disconnected and an additional layer of protection is created. This requires – for example – using relays with normally open (NO) contacts or bistable latching relays, so even a loss of control power can’t lead to a reconnection.

The best is often using fail-safe solid-state switching devices to minimise the current consumption while held on and maximise reliability.

In order to implement an advanced disconnection scheme, the BMS must support it and provide an adequate signal to act upon at least a fraction of a second before the DC charge bus gets isolated.

This can take the form of an “OK to charge” control signal and/or some kind of dedicated “charger enable” output, which would both get turned off long before a high-voltage (HV) protection event occurs.

Here again, junk-grade BMS products typically never offer such functionality and are therefore completely unsuitable to build such systems.
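The ordering requirement described above can be sketched as a small decision function. This is only an illustration under stated assumptions, not the logic of any particular BMS: the two per-cell thresholds, the `ChargeOutputs` type and the `charge_side_outputs` function are hypothetical names and values, and real limits must come from the cell manufacturer's data.

```python
from dataclasses import dataclass

# Hypothetical per-cell thresholds in volts, for illustration only.
CHARGE_ENABLE_OFF_V = 3.60  # withdraw the "OK to charge" signal at or above this
HV_DISCONNECT_V = 3.70      # open the charge bus disconnector at or above this


@dataclass(frozen=True)
class ChargeOutputs:
    charge_enable: bool         # True = signal asserted, sources may charge
    charge_bus_connected: bool  # True = charge bus disconnector closed


def charge_side_outputs(max_cell_v: float) -> ChargeOutputs:
    """Derive the charge-side outputs from the highest cell voltage.

    The enable signal is withdrawn at a lower threshold than the
    disconnect, so charging sources are already shut down by the
    time the charge bus gets isolated.
    """
    enable = max_cell_v < CHARGE_ENABLE_OFF_V
    connected = max_cell_v < HV_DISCONNECT_V
    return ChargeOutputs(charge_enable=enable, charge_bus_connected=connected)
```

Because `charge_enable` has to be actively asserted, a loss of signal reads as "do not charge", which matches the fail-safe requirement. A real controller would also latch the disconnect and add hysteresis and timing, which this stateless sketch leaves out.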

Individual Disconnection

If damage to other charge controllers is the main concern, disconnecting a device on its own is effective. This equates to giving it its own charge bus and disconnector. This can work very well for some unregulated wind generators, which are notorious for producing voltage surges and very high open-circuit voltages. Units featuring external charge controllers (in contrast with those equipped with built-in regulators) can be disabled by intervening upstream of the controller.

The drawback is the cost of an additional disconnector.

Absorbing/Deflecting the Surge

Another very effective option is ensuring that the current has somewhere to go following a disconnection: the output of a charge controller can be split over an isolator (diodes) and shared between the lead-acid starting battery and the lithium battery charge bus.

In this case, the presence of the lead-acid starting battery becomes essential to the safe operation of the system.

Not all charge controllers accept being wired this way however, because it effectively “hides” the battery voltage until charging begins. Some controllers draw on the battery to power themselves and operate in standby before starting to charge. Many wind generators fall into this category and simply refuse to operate when cabled this way.

More relevant information can be found further below under charge splitting, because the strategy can be, partially or wholly, applied to the charge bus of a dual bus system.

Issues Associated with a Load Bus Disconnect and Possible Solutions

Disconnecting the load bus presents no hazards at all as long as all loads connected are resistive and/or capacitive in nature. Loads falling outside this definition are inductive and therefore include electromagnetic devices like coils, motors and solenoids. The disconnection of a powered inductive load results in a reverse (i.e. negative) voltage spike (also known as back-EMF) produced by the collapsing magnetic field. The amount of energy released is proportional to the square of the intensity of the field, so the primary offenders are high-current devices like winches, windlasses or starter motors.

When the load bus is disconnected from the battery to stop further discharge, any energy surge released in the load circuit will potentially reach all connected equipment on board, from lights to electronics, and these will be exposed to a brief, but possibly intense reverse-voltage pulse. A lot of marine electrical equipment is protected against reverse polarity connection and, up to a point, voltage surges, but the back-EMF from a large DC motor tripped under heavy load still has the potential to take out a lot of equipment on board.

Back-EMF Suppression

Suppression involves shorting out the spike at the source and it is very commonly implemented for small coils by the addition of a free-wheeling diode. A free-wheeling diode is wired to conduct from the negative towards the positive and therefore does nothing (blocks any current) in normal operation, but it clamps out the negative voltage spike to a value below 1V typically.

Suppression is best implemented as close as possible to the source by adding a diode across the terminals of the offending winding, but a limit exists to the amount of energy a diode can take in a pulse without getting destroyed. This energy is equal to $W = \frac{1}{2} L I^2$, where $L$ is the inductance of the motor or coil and $I$ is the current at the time of the disconnection. As large motors are significantly inductive and the energy increases with the square of the current, this approach is only really practical for small loads like relay coils or a fridge compressor DC motor, due to the cost of very large diodes.
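To get a feel for how quickly the stored energy grows with current, the formula above can be evaluated for two cases. The inductance and current figures below are hypothetical round numbers chosen only to show the scaling; they are not measurements of any real coil or motor.

```python
def inductive_energy_joules(inductance_h: float, current_a: float) -> float:
    """Energy stored in an inductance: W = 0.5 * L * I^2, in joules."""
    if inductance_h < 0:
        raise ValueError("inductance must be non-negative")
    return 0.5 * inductance_h * current_a ** 2


# Hypothetical figures, for illustration only.
relay_coil_j = inductive_energy_joules(0.1, 0.1)       # 100 mH at 0.1 A, about 0.5 mJ
large_motor_j = inductive_energy_joules(0.001, 100.0)  # 1 mH at 100 A, about 5 J
```

Even with a hundred times less inductance, the high-current load in this example stores about ten thousand times more energy, which is why a diode that comfortably protects a relay coil is no match for a large motor.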

Disabling the Device in Advance

Disabling the device while the battery is still in circuit is here again a sensible and highly effective solution. It is best implemented as a low-voltage disconnect of the control circuit (i.e. control solenoid etc), which is low-power and low-current and therefore doesn’t require any high-capacity equipment.

This preventative action also has the advantage of potentially avoiding a low-voltage disconnect under high load with a general loss of power on board. While new, fresh, lithium cells have very low internal resistance and the voltage doesn’t sag much even under heavy loads, it increases over time and older installations become more susceptible to experiencing low-voltage disconnects under heavy loads when the cells are at a low state of charge.

Care must be taken to ensure that this early action will always precede the disconnection of the load bus, and the best and most reliable way to achieve this is getting the BMS itself to supply this signal. This eliminates potential conflicts between the reaction time of an independent low-voltage disconnect device and the BMS dropping the load bus in the event of a sudden and significant voltage drop.
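One way to picture a single device supplying both signals is a function that returns the required actions in the order they must happen. This is a minimal sketch, assuming two hypothetical per-cell thresholds and made-up action names; it does not describe the behaviour of any specific BMS.

```python
# Hypothetical per-cell thresholds in volts, for illustration only.
HEAVY_LOAD_INHIBIT_V = 3.00  # drop winch/windlass control circuits below this
LV_DISCONNECT_V = 2.80       # open the load bus disconnector below this


def load_side_actions(min_cell_v: float) -> list[str]:
    """Return the protective actions required, in the order they must occur."""
    actions = []
    if min_cell_v < HEAVY_LOAD_INHIBIT_V:
        actions.append("inhibit_heavy_loads")
    if min_cell_v < LV_DISCONNECT_V:
        actions.append("disconnect_load_bus")
    return actions
```

Even when a sudden voltage drop crosses both thresholds in a single reading, the inhibit action is listed first, so the inductive loads are released before the load bus opens. Two independent devices, each with its own reaction time, cannot give that guarantee.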

Voltage Sensing

As long as a power source only charges the lithium bank, the reference voltage can normally be obtained from the bank.

The alternative is getting it from the DC charge busbar, which is the same, but upstream of the feed line and disconnector. The benefit is that it keeps reflecting the charger output voltage after a disconnection and can prevent over-voltage on the charge bus; the drawback is that it ignores the losses in the feeder cable and disconnector relay.

Many charging devices fall back on regulating their own output in the absence of a signal at the voltage sensing input, but this usually needs to be tested on a case-by-case basis if the installation is going to rely on it for proper operation.

These two strategies can be mixed and matched as required by charging devices, but the analysis needs to be carried out.

If a charge splitting strategy is used, then the corresponding guidelines apply to the chargers featuring a split output.

Simplistic Alternatives to the Dual DC Bus Topology

Building and commissioning a dual DC bus system can be demanding. It requires a good understanding of the behaviour and capabilities of the equipment used on board and some kind of “what-if” analysis must be carried out to ensure that simple unusual events are not going to result in serious malfunctions.

For these reasons, there appears to be no shortage of dangerous and irresponsible advice to be found under the KISS moniker when it comes to building lithium battery banks and installations. Let’s just say that, provided the cells have first been balanced, it always “works” – until something suddenly goes very wrong. Badly engineered lithium battery systems are still causing enormous amounts of electrical damage on board vessels, which typically doesn’t get reported back. I do hear about those however, quite regularly.

System design doesn’t lend itself to browsing around and averaging; it needs to be consistent and robust

Here, we will try and explore a couple of actually valid avenues to “simplify” the construction of a lithium system without creating additional risks.

The simplest way of resolving the issue of the disappearance of the battery in the electrical system following a safety disconnect event is… ensuring that a battery remains afterwards.

Two examples of simplistic, but safe and functional, topologies are provided below. In each case, we deflect and negate the problems instead of eliminating them at the source. While these schemes can easily be implemented successfully, they remain workarounds with some drawbacks and limitations.

There is no simplification down to the point of just dropping some lithium battery cells in a battery box

Regardless of the system design retained, all the charging voltages still need to be adjusted in order to stay clear of over-voltage problems at cell level and due care still needs to be taken not to overcharge the lithium cells.

The new battery also needs to be protected just the same, because of its different electrochemical nature.

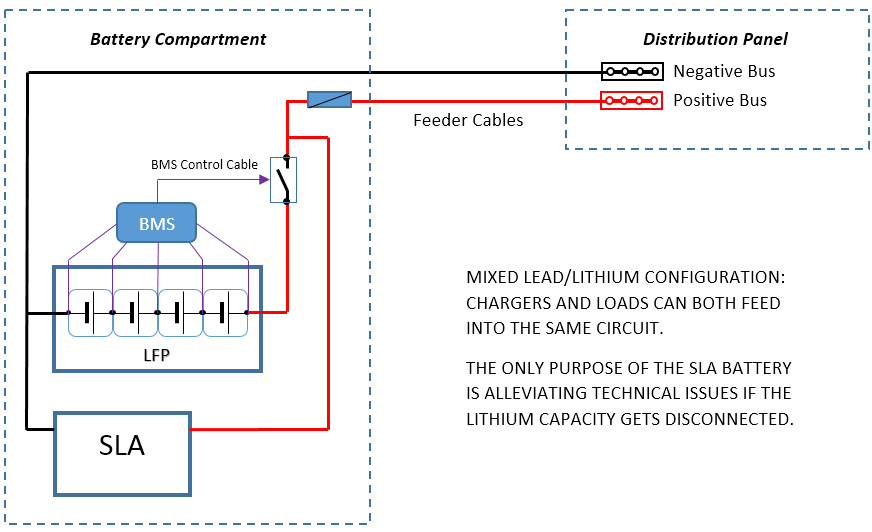

Alternative 1 – Lead-Lithium Hybrid Bank

The simplest way of resolving all the challenges mentioned at the beginning of this article is running the lithium bank in parallel with some standard lead-acid capacity. If any issue arises with cell voltages or temperatures, the lithium bank can be disconnected and the installation will revert to a simple lead-acid system. In some instances, this lead-acid capacity could get damaged or destroyed if the event that resulted in the disconnection of the lithium cells was severe, like an alternator regulation failure.

The simplest safe lithium installation: leaving a sealed lead-acid battery in parallel with the lithium bank at all times allows disconnecting the lithium capacity in case of problem without any issues. The additional SLA doesn’t contribute to any meaningful capacity; its function is ensuring charging sources always see a battery in circuit.

The practical result of such an arrangement is that the lithium battery ends up doing virtually all the work, because it is first to discharge due to its higher operating voltage. The charging voltages are no longer high enough to provide effective charging for the lead-acid cells, but as those are being trickle-charged above 13V all the time, they can be expected to remain essentially full and it hardly matters.

The lead-acid battery needs to be able to absorb whatever “unwanted” current may come its way if the lithium bank gets disconnected due to a high voltage event, for example. In some instances, a single sealed lead-acid (SLA) battery can be sufficient. SLAs are the best choice for this application as they don’t consume water and are very inexpensive; gel cells should be avoided as they are costly and far less tolerant of overcharging, and AGMs would be a complete waste of money in this role.

The drawbacks are:

- Some charge gets lost trickling continuously into the SLA, more so in a lead-acid battery in poor condition.

- It doesn’t fully eliminate the lead and associated weight.

- Removal of the SLA from the system, at some point in the future, would create an unexpected liability.

Some advantages are to be found as well:

- Disconnection of the lithium bank can be managed with a single contactor; there is no need to implement a split bus. This can allow using some small BMS solutions incapable of managing a dual DC bus.

- The lithium bank is literally added to the installation in place, normally without cabling alterations required, but not without voltage and regulation adjustments.

With this in mind, it certainly is the simplest fully functional design one can build, as long as protection and automatic disconnection are still very properly implemented for the lithium bank.

Should the lithium bank ever become heavily discharged, the additional lead-acid capacity can start contributing, but this would also leave it at a reduced state of charge for a time afterwards and cause it to start sulphating. This is not automatically much of a concern, because it may not happen (this depends on the BMS low-voltage disconnect threshold) and it doesn’t actually result in much harm if it does. The SLA needs to remain in a reasonable condition however, in order to be able to absorb any transients if the lithium bank gets dropped off due to excessive voltage and not continuously discharge the lithium cells at an excessive rate.

Voltage Sensing

NEVER, EVER, SENSE THE CHARGING VOLTAGE DIRECTLY AT THE LITHIUM BANK TERMINALS IN THIS CONFIGURATION

The sensing voltage required for charge control must be sourced upstream of the lithium battery disconnector, or in other words from the SLA battery, so it remains valid even after a disconnection of the lithium capacity. This is very important, otherwise uncontrolled, unlimited charging of the lead-acid battery will occur after the lithium capacity gets isolated.

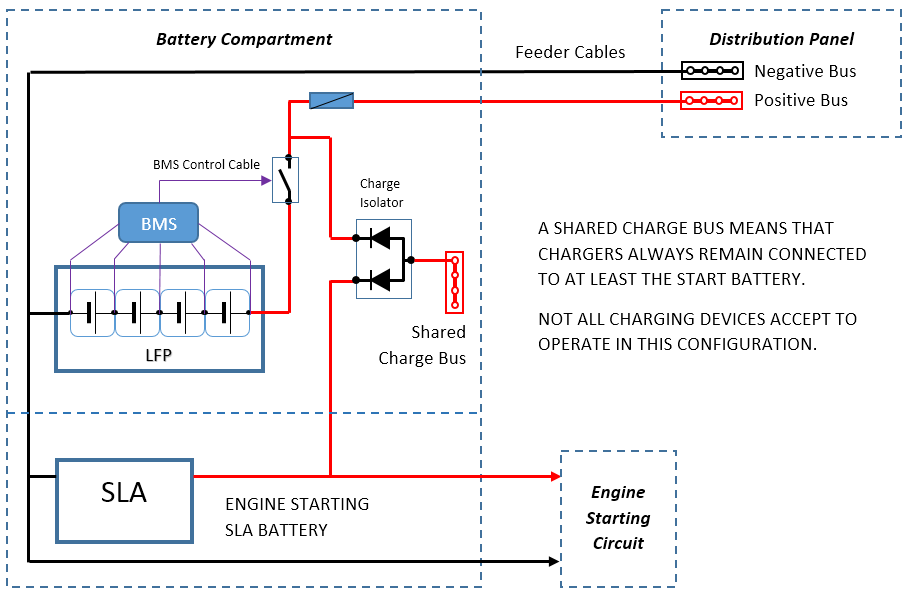

Alternative 2 – Split Charging

Considering that, in most instances, good system design practices lead to keeping a separate SLA battery for starting the engine, one can be tempted to derive similar benefits from it, instead of carrying one or more additional SLAs as required by the Lead-Lithium Hybrid topology.

Charge isolators are extremely useful devices for building lithium battery systems and can be found in a variety of configurations, 1 or 2 inputs connected to 2 or 3 outputs. They are extremely rugged and robust. The best ones all seem to be manufactured in the USA: Sure Power Industries, Hehr and Cole Hersee are all excellent sources for quality units. Inferior products generate considerably more heat.

If efficiency is a key concern, isolators using MOSFET transistors instead of diodes are available, albeit at significantly higher cost.

Using a charge isolator (also known as blocking or splitting diodes) can provide at least a partial solution, depending on the nature of the charging devices present. It is a good option with alternators and any chargers that don’t need a voltage originating from the battery to begin operating.

Since most of the electrical issues with the integration of lithium batteries in traditional marine systems arise with battery disconnection, splitting and sharing a common charge bus with the engine starting SLA battery is a very simple and effective way of addressing the matter.

Unfortunately, some battery charging devices refuse to operate behind an isolator; this prevents adopting this configuration as a universal solution, but it is nevertheless valuable.

Alternators and unregulated/crudely-regulated wind/tow generators are usually happy to function this way behind a diode. Internally-regulated generators commonly refuse to start unless they can “see” the battery voltage, because they require a small amount of power to first “release the brake”.

If this configuration can be achieved, then again the lithium bank can simply be dropped using a single disconnector without any ceremony, should some adverse event occur. One side-benefit is that the charging systems feed both into the lithium bank and the start battery, even though the voltage isn’t ideally quite high enough for the latter. This can be remediated by the addition of a small dedicated charger for the lead-acid battery, either solar or through step-up DC/DC conversion from the lithium bank.

Note that the charge bus still feeds into the positive bus after the lithium bank has been disconnected. The voltage from the charge bus is limited by regulation and the presence of the lead-acid battery, but the power quality may not be adequate with possible brown-outs. Also disconnecting the feeder line to the distribution panel in a battery protection event is one way of remediating this.

In such a configuration, it is very important that the lead-acid battery always remains present in the charging path. A battery switch to isolate the engine circuit is fine and desirable, but the charge isolator(s) should remain directly connected to that battery at all times to provide a pathway to dissipate any surge, as well as a nominal base load for the charge regulators.

Voltage Sensing with Charge Isolators

Any serious charge controller comes with a battery voltage sensing input. When the charger output is split to charge multiple banks, this becomes even more important as any losses over the charge isolator must be compensated for and a quandary always arises as to where to source the charging reference voltage.

Accurate battery voltage control is only going to be achieved for the battery being sensed, because there are voltage losses proportional to the current in charging systems. With a lithium bank in the system, sensing should reflect the voltage of the lithium bank and this will result in best performance for charging it; this is usually the desired outcome.

NEVER, EVER, SENSE THE CHARGING VOLTAGE DIRECTLY AT THE LITHIUM BANK TERMINALS IN THIS CONFIGURATION

Voltage sensing for a lithium battery in a split-charging topology must be performed at the output of the charge isolator, upstream of the battery disconnector, so disconnection of the battery doesn’t dissociate the sensed voltage from the charging voltage altogether: this would otherwise lead to uncontrolled, unlimited overcharging of the remaining lead-acid batteries in the system.

Voltage sensing can sometimes be performed at the input terminal of the charging isolator instead, for some equipment such as alternators typically. In this case, the charging voltage adjustment must be made for the lowest voltage drop that can be experienced over the isolator. This is normally about 0.3-0.4V for Schottky diode type units and essentially zero if a MOSFET-based isolator is used instead.

The difference in system performance is subtle and yields a less aggressive charging characteristic, with lithium cells in particular.
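The adjustment for sensing at the isolator input can be illustrated with a little arithmetic. This is a sketch under assumptions: the 14.0V target is a hypothetical figure, the function names are made up for the example, and the drop values are simply taken from the 0.3-0.4V range quoted above.

```python
def regulator_setpoint_v(target_battery_v: float, min_isolator_drop_v: float) -> float:
    """Setpoint for a regulator that senses at the isolator input.

    Only the lowest expected drop is added, so the battery can never
    be taken above its target voltage.
    """
    return target_battery_v + min_isolator_drop_v


def battery_voltage_v(setpoint_v: float, actual_drop_v: float) -> float:
    """Voltage reaching the battery for a given drop across the isolator."""
    return setpoint_v - actual_drop_v


setpoint = regulator_setpoint_v(14.0, 0.3)       # about 14.3 V at the isolator input
at_high_drop = battery_voltage_v(setpoint, 0.4)  # about 13.9 V at the battery
at_low_drop = battery_voltage_v(setpoint, 0.3)   # about 14.0 V at the battery
```

When the drop across the isolator sits above its minimum, the battery sees slightly less than the target and only reaches it as the drop falls back, which is one way to read the less aggressive charging behaviour.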

General Electrical Installation

Fusing & Feeder Cables

A heavy-duty fuse should normally be found very close to the bank to protect the feeder cables. This fuse should be sized so it will never blow unless an intense short-circuit occurs, or it may create the potential for at least accidentally destroying the alternator, and often much more.

ANL fuses are cost-effective, easy to source and can offer interrupt ratings up to 6kA at 32V, but some are only good for 2kA.

The nominal current capacity of a fuse reflects the current it can conduct indefinitely without blowing. Currents above this value will cause the fuse to heat and eventually blow; the time it takes for this to happen is related to the ratio of the over-current and can range from minutes or more to milliseconds.

The interrupt rating of a fuse is considerably higher than its current capacity and defines how much current the fuse can successfully interrupt by blowing; values beyond this figure may result in continued arcing over the fuse after it has blown. The interrupt rating is very voltage dependent, for obvious reasons, and increases significantly at lower voltages.

Unless the feeder cable leaving the battery compartment is of an exceptional size and the battery bank is very large, a common low-voltage ANL fuse with an interrupt rating of 6kA at 32VDC is normally adequate. There is too much resistance in the cells, connections and cables to sustain the hypothetical currents (and associated apocalyptic predictions) that would supposedly arise from a short-circuit.

For a 13.3-volt source to supply in excess of 6000A, the total circuit resistance would need to be below 2.2 milliohms. Small lithium battery systems of interest for pleasure craft normally fall short of such capability simply due to the size of the cabling used and number of bolted connections involved.

In the case of larger installations, a proper prospective fault current calculation should be carried out and the fusing should be selected to match the required interrupt rating.
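The resistance figure quoted above follows directly from Ohm's law, and the same relationship gives a first estimate of the prospective fault current. The sketch below is a simplification that treats the bank as an ideal voltage source in series with fixed resistances; the individual resistance values in the example are hypothetical and would need to be measured or taken from datasheets for a real installation.

```python
def max_resistance_ohm(source_v: float, current_a: float) -> float:
    """Total circuit resistance below which the current exceeds current_a."""
    if current_a <= 0:
        raise ValueError("current must be positive")
    return source_v / current_a


def prospective_fault_current_a(source_v: float, resistances_ohm: list[float]) -> float:
    """Fault current into a dead short for a chain of series resistances."""
    total = sum(resistances_ohm)
    if total <= 0:
        raise ValueError("total resistance must be positive")
    return source_v / total


limit = max_resistance_ohm(13.3, 6000.0)  # about 0.0022 ohm, i.e. 2.2 milliohms

# Hypothetical values: cells, feeder cable and bolted connections, in ohms.
fault = prospective_fault_current_a(13.3, [0.002, 0.0015, 0.0005])  # about 3325 A
```

With these made-up figures, the fault current stays well under a 6kA interrupt rating. A larger bank with heavier cabling would lower the total resistance and could change that conclusion.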

Class T fuses offer much higher interrupt ratings (20kA) than the common ANL fuses and can become necessary to protect the feeder cables in large lithium battery bank installations.

The feeder cables should be sized according to the maximum acceptable voltage drop they can induce under normal operation. Quite often, alternator charging currents and inverter loads represent the maximums the installation can be expected to see.

Using unreasonably heavy cables or seeking negligible voltage drops at peak current also increases the maximum prospective short-circuit current the installation can produce and results in a higher level of risk. The cables need to be able to hold until the fuse blows and, until then, their resistance is precisely a good part of what limits the fault current: it pays to keep this in mind and take advantage of it.
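Cable resistance, and therefore the voltage drop at a given current, can be estimated from the conductor length and cross-section. This sketch assumes plain copper at around 20 °C with a resistivity of approximately 1.72e-8 ohm-metres, and ignores terminations and temperature rise; the cable length, size and current in the example are hypothetical.

```python
COPPER_RESISTIVITY_OHM_M = 1.72e-8  # approximate value for copper at 20 °C


def cable_resistance_ohm(round_trip_length_m: float, cross_section_mm2: float) -> float:
    """Resistance of a copper run; length covers positive and negative conductors."""
    if cross_section_mm2 <= 0:
        raise ValueError("cross-section must be positive")
    area_m2 = cross_section_mm2 * 1e-6
    return COPPER_RESISTIVITY_OHM_M * round_trip_length_m / area_m2


def voltage_drop_v(current_a: float, round_trip_length_m: float, cross_section_mm2: float) -> float:
    """Voltage drop over the run at the given current."""
    return current_a * cable_resistance_ohm(round_trip_length_m, cross_section_mm2)


# Hypothetical example: 4 m round trip of 50 mm2 cable carrying 100 A.
resistance = cable_resistance_ohm(4.0, 50.0)  # about 1.4 milliohms
drop = voltage_drop_v(100.0, 4.0, 50.0)       # about 0.14 V
```

In this example the cable alone contributes about 1.4 milliohms, a significant share of the resistance that limits the fault current, so doubling its cross-section would halve both the voltage drop and that protective contribution.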

Common Negative

In the case of a system with more than one battery bank – a very common configuration due to the presence of at least a starting battery – it is usually wise and sensible to tie all the negatives together, because it simplifies the integration of any device connected to more than one bank.

If charge splitting is to be used one way or another, then a common negative to these battery banks is mandatory.

Battery Sensing

Battery Voltage

If not already present, a dedicated battery voltage sensing cable with its own small fuse at the battery end should be run from the source of the sensing voltage, which often is not at the battery itself, to wherever the charging equipment is/will be located. All voltage sensing can then be consolidated onto a dedicated terminal block rather than having multiple wires all running back to the same location for an identical purpose.

A great deal of damage and destruction can result from sourcing the charging reference voltage inadequately in an installation with a lithium bank

Where the voltage sensing cable should be connected in the system depends on the topology of the installation and the subject was discussed on a case-by-case basis earlier.

Battery Current

Many systems also include a current measurement shunt associated with a random number generator battery monitor or ammeter. The shunt is almost always found on the negative side, because it is technologically simpler and cheaper to measure the current there. Run a twisted pair cable from the shunt block directly to the measuring instrument.

Other than the negative voltage sensing core and any BMS wiring, there should be nothing other than the lithium bank connected to the battery side of the shunt. This includes the negative of other batteries, such as a starting battery: failure to observe this will result in the current of the other batteries also being measured, when it shouldn’t.

Temperature Sensors

Any battery temperature sensors associated with charge controllers and pre-existing lead-acid cells must be disconnected from all charge controllers and removed altogether. Some controllers may signal a fault as a result, but normally keep operating assuming a default constant battery temperature: this is exactly what we want. Occasionally, an ill-tempered controller may refuse to operate without its temperature sensor. Most temperature sensors are 2-wire negative temperature coefficient (NTC) thermistors (resistors whose value is temperature-dependent). Measure it at ambient temperature with a multimeter and replace it with an approximately equivalent fixed resistor (the nearest standard value will do) at the controller terminals.
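Picking the nearest standard value can be done by eye from a resistor chart, or with a few lines of code. The sketch below uses the common E12 series; the measured value of 9.7 kΩ is a hypothetical example, not a figure for any particular sensor.

```python
import math

# Base values of the E12 standard resistor series (one decade).
E12 = (1.0, 1.2, 1.5, 1.8, 2.2, 2.7, 3.3, 3.9, 4.7, 5.6, 6.8, 8.2)


def nearest_e12(resistance_ohms):
    """Return the E12 standard value closest to the measured resistance."""
    decade = math.floor(math.log10(resistance_ohms))
    candidates = [
        base * 10 ** exponent
        for exponent in (decade - 1, decade, decade + 1)
        for base in E12
    ]
    return min(candidates, key=lambda value: abs(value - resistance_ohms))


measured = 9700.0  # hypothetical multimeter reading in ohms at ambient temperature
print(f"Measured {measured:.0f} ohms, fit a {nearest_e12(measured):.0f} ohm resistor")
```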

This aspect is in fact part of the integration of lithium batteries with other equipment, but as the task of removing the sensors takes place within the battery compartment, it seemed logical to include it here.

Temperature sensors have their place in a lithium battery bank, but they are part of the battery protection circuitry and completely unrelated to the charging voltage. Lithium batteries in marine installations should always operate within a degree or two of ambient temperature, without exhibiting meaningful differences between cells.

Battery Switches

Simple heavy-current battery switches are a much better choice than combining switches with lithium batteries, as paralleling of batteries is usually most undesirable.

On dual DC bus systems, it is highly inadvisable to leave or install a battery master switch in the feed line between the batteries and the bus disconnectors. The correct way of achieving battery isolation is by opening both the charge and load bus disconnectors, which is a function that is normally provided by the BMS; failing to observe this point would again result in removing the battery while leaving both buses linked together as described earlier.

The only acceptable function for a manual battery isolator switch is turning the power off to the vessel, i.e. disconnecting the load bus.

If complete manual battery disconnection is desired, then either two single-pole battery switches or a 2-pole switch must be used to isolate both positive buses. Some analysis must be carried out to determine whether leaving the charging sources tied together at the “floating” charge bus with nothing to charge could result in equipment damage or not.

While the BMS may be able to provide “advance notice” of a charge disconnect and turn the chargers off, a manual disconnect typically won’t.

Paralleling Switches

Paralleling batteries is a concept that evolved from trying to crank diesel engines with proverbially flat lead-acid batteries. One good engine starting battery is all it takes to do the job. Unless the engine is truly large, a single battery is normally ample, and more is just dead-weight.

If either the lithium or the lead-acid battery is heavily discharged, closing a parallel switch can initially result in an intense current flowing between the banks. This puts the cabling at risk, and also the lead-acid battery, due to the formation of explosive gases.

Systems including isolated banks of each type normally also include provisions for charging the lead-acid capacity properly (i.e. at higher voltages, using a temperature-compensated voltage and float-charging) and this makes the paralleling switch a very dubious proposition, because it exposes the lithium cells to a completely inadequate charging system. The fact that you “won’t leave the paralleling switch on” only means that it will happen anyway, sooner or later, because it can.

On a dual DC bus system, there is also the question of where to connect the switch: the tie-in can typically both consume and supply energy and it can only be cabled to either the charge or the load bus, leaving the system vulnerable to discharge through the charge bus, or overcharge through the load bus afterwards.

I personally prefer having the option of using jumper cables if ever warranted, rather than creating an unnecessary and permanent liability by having a paralleling switch in a dual DC bus installation.

Simple systems that don’t feature a dual DC bus can actually be designed with a paralleling switch, but it must join past the lithium bank disconnector relay, not on the battery side. This ensures that the BMS can break the parallel link if trouble is coming from there. Regardless, it is still a bad idea.

Voltage-Sensitive Relays (VSR)

Voltage Sensitive Relays (or VSRs) are always poor solutions in marine electrical systems and, at best, next to useless with lithium batteries. The one depicted above, with a cut-in voltage of 13.7V and a cut-out threshold of 12.8V, would essentially remain closed until deep discharge has occurred.
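The behaviour described above follows directly from the hysteresis of the relay. The sketch below models a simple single-sided VSR using the 13.7V and 12.8V thresholds quoted in the text; the sequence of bank voltages is a hypothetical illustration of a lithium bank being charged and then discharged.

```python
def vsr_state(closed, voltage, cut_in=13.7, cut_out=12.8):
    """Next relay state for a simple voltage-sensitive relay with hysteresis."""
    if not closed and voltage >= cut_in:
        return True   # voltage reached the cut-in threshold: relay closes
    if closed and voltage <= cut_out:
        return False  # voltage fell to the cut-out threshold: relay opens
    return closed     # otherwise the relay keeps its previous state


# Hypothetical lithium bank voltages: charging, then resting and discharging.
voltages = [13.2, 13.8, 13.4, 13.3, 13.2, 13.1, 13.0, 12.9, 12.7]
closed = False
for v in voltages:
    closed = vsr_state(closed, v)
    print(f"{v:.1f} V -> {'closed' if closed else 'open'}")
```

Once closed, the relay stays closed across the whole normal operating voltage range of the lithium bank and only opens when the bank is nearly empty.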

Voltage-sensitive relays are another plague of modern marine electrical systems. They gained ground after people experienced issues with diode-based charge isolators due to the voltage drop they induce and because VSRs are seemingly easier to deal with and understand.

Each battery bank has its own state of charge and needs in terms of charging profile. Paralleling banks together is never a great idea, even when the batteries are of the same type and require the same voltages.

Some VSRs sense the voltage on one side only, others on both; some offer adjustable thresholds and others not. Unless the unit is fully adjustable and includes both low and high voltage disconnection points, it is normally completely useless (and equally harmful) around lithium batteries.

Forwarding a charging voltage from a lithium bank to a lead-acid battery won’t result in good charge characteristics. Doing the opposite requires observing both a connection and a disconnection voltage threshold, because lead-acid battery charging reaches excessive voltages. The resulting charge characteristics for the lithium battery are typically not good either, because no absorption time can be provided. It keeps getting worse: should one of the banks become heavily discharged, closing of the VSR can easily result in a sustained discharge current way beyond its current capacity, leading to some catastrophic failure.

On dual DC bus systems, VSRs normally bring all the same issues as paralleling switches: there is no correct place to wire them in and they have no place there.

Regardless of brand or type, VSRs never seem to lead to any good solutions in systems with both lithium and lead-acid cells. Fortunately, there seems to be an endless queue of ill-inspired people keen to buy them and this makes them very easy to get rid of.

The best answer to charging auxiliary engine starting SLA batteries is using a battery isolator, if an alternator is present, and DC/DC chargers from the lithium bank (or an auxiliary solar panel) to ensure full charge can be reached. The installation can then simply be configured to charge the lithium bank optimally.

Engine Starting Batteries

Internal combustion engines can be cranked with LiFePO4 batteries, very successfully at that, and even when the battery is low on charge, within reason: a lithium bank down to 3.0V/cell can struggle to crank a diesel. There are however a number of good reasons for not doing it when the vessel is large enough to sustain a dual bank installation:

- Redundancy and the ability to still start the motor with a discharged house bank are lost.

- Unless the lithium bank is huge and a current of some 100A means little, engine cranking still causes the voltage to sag at the battery and creates transients in the system.

- Lithium batteries are harder on engine glow plugs, because they supply a higher voltage under load.

Unless low weight is everything, using a lithium battery as a separate starting battery is possible, but usually not sensible:

- A SLA purely used as a starting battery is very easy to keep at full charge and commonly lasts 8 years or more on a marine vessel. A very small solar panel can be dedicated to floating that battery at the appropriate voltage if needed.

- The comparatively very high cost (and added complexity) of a lithium battery in this application cannot be justified.

- A lithium starting battery should be kept at about 50% SOC in order to age well; it introduces a new lithium charge control regime in the system.

- As highlighted earlier, there are often technical benefits to be found in still having a SLA in the system and dedicating one to cranking the engine is a good use for it.

Next Steps

Once the new battery bank has been balanced, assembled, protected and installed in an electrically correct configuration as described above, it needs to be integrated with existing charging equipment.

Due to the large variety of gear found on the market, with hardly any of it ever intended or properly designed to charge lithium batteries, chargers require a lot of attention in order to function without tripping the high voltage protection limit or overcharging the bank over time.

The subject is extensive enough to be treated separately.

This is a really good article. Thanks for the time you took to write it and I hope it helps a lot of cruisers!

Bob,

Thank you for your kind words, you have been in this field for quite a while… Late last year I saw a lithium battery fiasco of such a magnitude that it prompted me to start writing this material. At the time, the owner didn’t even understand how and why it had suddenly gone so wrong.

The electrical engineering component present in these systems is too often not identified properly or discounted, but it really is the backbone of the installation.

Best regards,

Eric

I built a device (microcontroller/relay) that disconnects the generator field when 14 V has been reached.

The field is reconnected at 13.35 Volts.

I was thinking to reuse my lead-acid battery charger by controlling it through the temperature sensor input.

Instead of an NTC I would just use a fixed resistor that lowers the end of charge / float voltage.

Or even better, measure the charge voltage with a microcontroller and adjust the resistance so that a float voltage of 13.3 Volts would result. The idea is just to prevent any further charging when 14 V battery voltage has been reached.

Have you or anyone tried this kind of approach ?

Hello Mike,

Good on you for engineering something. Lithium batteries need absorption like any other battery and disconnecting at 14.0V will produce very unsatisfactory results unless the charge current is very small. Charging will stop a long way short of the nominal capacity and serious problems will develop over time due to systematic lack of proper charging. The only fully correct charge termination condition is based on voltage and residual current, typically C/30.

You can certainly fool and control a charger to do something other than what it was initially intended for. Using the temperature input is a thought as long as it doesn’t decide that the value has gone out of range. You need to test that with a potentiometer – which you might have done already. The other way is manipulating the signal at the voltage reference input. We have done that with alternators for a few years now. When the charger/regulator has multiple stages, the solution must be able to work with them. It is easiest and simplest when the charger provides a constant voltage.

Now, this is charge control. The bank still needs to be protected independently of that of course.

Best regards,

Eric

PS: I have an article about charging lithium batteries at draft stage. When I publish it, I might move your post there.

Thanks Eric.

Your articles on LiFePO4 are the best on the internet.

My primary concern is to not overcharge. I am not that concerned with getting the full charge, I just want to stay on the safe side. My Hitachi alternator gives out just 5 Amps (C/20) at 14 Volts, which gives an approximate current-based end of charge together with the 14 Volt limit. I can configure the voltage a bit higher to get a lower current termination point.

My (other) PIC18 based controller also measures the current, so I could include the current in the algorithm.

Eventually the controller should control the charge current from solar, alternator, and charger.

And it should measure the cell voltages also.

My battery charger actually has a constant voltage output setting of 13.2 Volts for continuous Pb battery load carrying.

I will check how the temperature sensor input does control the output voltage with the above setting.

BR Mike

Mike,

Getting a proper charge, at least from time to time, is extremely important too with LiFePO4 cells, otherwise the voltage starts rising earlier and earlier over time and the available capacity starts shrinking. There is a memory effect taking place over time with partial cycles and incomplete recharge.

When you charge with low-power sources, the battery has more time to absorb the charge and the voltage doesn’t rise as quickly, so it mitigates the problem. Increasing the voltage above 14.0V makes the pack a lot less tolerant to small differences in cell balance and more difficult to charge unless you have good cell balancing circuitry. Cells always seem to drift apart a little over time.

I have seen cells completely destroyed even though the pack voltage had never gone over 14.0V due to severe cell balance issues. Once a cell starts getting stressed during charging, it gets damaged and everything falls apart. You can live without automatic cell rebalancing and make manual adjustments from time to time (after 2-4 years in my experience), but cell-level monitoring is the foundation of everything with lithium.

You should be able to find the output voltage feedback in the regulation circuit of your 13.2V PSU and alter that if needed.

Best regards,

Eric

Dear Eric,

It will be interesting to see how the cell balance will drift. My plan is to use a C/20 top balancing charger from time to time, maybe once per year. It is one of those made for the R/C market.

I also have a balancing board that could be connected permanently, but I want to see first how the cells behave. It will balance at any charge level, and that is maybe not a good idea.

I managed to get the WAECO MCA 1225 charger to charge to 14.0 Volts and float at 13.4 by connecting a 47.5 kOhm resistor as temperature compensation. The charging turns to floating at 14 V / 1.5 A (15 minutes) charging current. Then I just manually depower the charger, I will not keep it floating for a longer time.

Looks promising.

Maybe I should lower the float value to 13.30 in order to get some discharge from the battery. Not to keep it full all the time. This would drop the charge voltage a bit, to maybe 13.9, but with the current based EOC, it should not be a problem. 13.3 Volts would allow the charger to bear the load from the fridge and other in harbour equipment, while not further charging the battery.

Using the CV mode would require some support circuitry to stop floating the voltage at the higher level. I’ll skip that for the time being. The “temperature compensation” method looks good to me.

Best Regards,

Mike

Mike,

Cell balance adjustments in a top-balanced pack can only be performed when the cells are very close to full of course, and this means when the voltage is rising in the upper knee of the charge curve. The current must also be low so the voltage really reflects the state of charge, not the internal resistance of the cells. Attempting to rebalance the cells blindly each time the voltage is above a given value doesn’t work, unless the charger is very small.

Floating has no value at all in terms of charging, absorption is what matters, and then charging needs to stop. Configuring a “floating” voltage that is lower than the resting voltage of the cells is one way of causing the charge to terminate and then it can contribute to powering the loads and prevent the battery from discharging too far again as you say.

Best regards,

Eric

Dear Eric,

Just to continue on my previous message.

One problem with the EOC current is that if the fridge compressor starts every 10 minutes, and the charger expects the EOC condition to remain for 15 minutes, the EOC charge condition may never be reached.

So I guess that to really define the EOC condition, the current of the consumers should also be in the equation.

Best Regards,

Mike

Mike,

This issue primarily arises when the charger tries to determine the EOC condition by measuring its own output, which is only correct in the case of stand-alone charging. If you are charging into a system that is also powering loads, then it is the battery current, not the charger output current, that matters. A charger suitable for a system of the type we are interested in must use an external current shunt to measure the battery current only.

Obtaining a complete picture of the current flows (sources, loads and charging) requires two shunts and then the third value can be calculated of course.

When the fridge compressor runs, the charger should try to maintain voltage regulation at the absorption setpoint and the battery current shouldn’t change. The EOC condition only needs to last long enough to avoid false positives, like when throttling down an engine while the battery is in absorption. This is a matter of seconds, not minutes. The battery current value usually needs to be filtered too, so brief fluctuations don’t cause an early termination.
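As a rough illustration of such a termination test, the sketch below combines the absorption voltage, a C/30 residual current threshold, a simple low-pass filter on the battery current and a short hold time. The class name, the 5-second filter time constant, the 10-second hold time, the 0.05V voltage margin and the 300Ah capacity are all assumptions made for the example; the current fed in must be the battery (shunt) current, not the charger output.

```python
class ChargeTerminationDetector:
    """Flags end of charge when the bank holds absorption voltage at low battery current."""

    def __init__(self, capacity_ah, absorption_v=14.0, tail_fraction=1 / 30,
                 hold_s=10.0, filter_tau_s=5.0, voltage_margin_v=0.05):
        self.tail_current_a = capacity_ah * tail_fraction  # residual current, e.g. C/30
        self.absorption_v = absorption_v
        self.hold_s = hold_s                      # assumed hold time, a matter of seconds
        self.filter_tau_s = filter_tau_s          # assumed smoothing time constant
        self.voltage_margin_v = voltage_margin_v  # assumed regulation tolerance
        self.filtered_a = None
        self.held_s = 0.0

    def update(self, battery_v, battery_a, dt_s):
        """Feed one sample of battery voltage and battery current; True when charge is complete."""
        if self.filtered_a is None:
            self.filtered_a = battery_a
        else:
            # First-order low-pass filter so brief dips don't end the charge early.
            alpha = dt_s / (self.filter_tau_s + dt_s)
            self.filtered_a += alpha * (battery_a - self.filtered_a)

        at_voltage = battery_v >= self.absorption_v - self.voltage_margin_v
        low_current = self.filtered_a <= self.tail_current_a
        self.held_s = self.held_s + dt_s if (at_voltage and low_current) else 0.0
        return self.held_s >= self.hold_s


detector = ChargeTerminationDetector(capacity_ah=300.0)  # hypothetical 300 Ah bank
for second in range(12):
    done = detector.update(battery_v=14.0, battery_a=8.0, dt_s=1.0)
print("charge complete:", done)
```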

Best regards,

Eric

Eric,

First, I have enjoyed reading all your write ups on your experiences with LFP battery configurations.

Some background. I have been cruising for 22 years. Getting old so transitioning to a 44’ power catamaran. I experimented with drop-in LFPs in my last boat and was quite impressed. I am knowledgeable about wiring and rewired much of my last boat over the 15 years I owned her.

After 6 months of reading everything I could get my hands on concerning installing LFPs and the issues with charging sources, high voltage and low voltage disconnects, etc., I came to the conclusion that the system needed some way to deal with the lack of a battery should a HVD or LVD event occur. Looking at the charge profile of the various lead-acid batteries, it seemed to me that a SLA-LFP combination might work. Looking around the internet I found 2 discussions of this approach and one of them is your article here. Apparently, you agree that such a hybrid system could deal with the, hopefully, rare LVD event. In addition, it seems that you agree that it could also handle a less rare HVD event where solar, charger, or alternator try to overcharge the LFP pack.

Are you aware of anyone actually implementing this approach? How has it worked out for them? It seems to me that a second (failsafe) HVD (and LVD?) would be appropriate in this design to guard against a failure of the primary battery protection device.

The new boat is wired for four battery banks. 1 each for the engine start batteries. 1 for the generator start battery. And 1 for the house battery. Catamarans are of course weight sensitive and this is crazy. I intend to get this down to 2 banks as I did with my previous sailing cat. One starting battery for the stbd engine and generator. One bank, a hybrid 800Ah LFP/200Ah SLA, for starting the port side engine as well as for the house bank. With emergency crossovers of course.

My expectation is that by keeping the SLA in parallel with the LFP bank I will not have to redesign the alternators or their control circuits. In addition, though I intend to modify the inverter/charger profile and the solar charger profile to LFP compatible values, I will not have to be concerned should something fail and try to overcharge or over-discharge the LFP bank.

I would be very interested in your feedback. Although there is much written about LFP batteries, most is EV, or RV related. There is not much marine related experience, and this definitely seems to be a marine only solution. The other discussion about an approach such as this seems to be a dead thread.

Apparently your BMS is not ready for production. I am currently considering the Orion Jr. Do you have recommendations?

Thanks

Bryan

Bryan,

Lithium batteries will deliver the same performance regardless of how they have been packaged in these applications. The difference will be in durability. The disappearance of the battery from the system is the key critical issue with drop-ins of course, because they disconnect without any advance warning. The only practical way of dealing with this is creating a hybrid bank indeed, with some “permanent” lead-acid capacity.

However, the capacity of the lead-acid isn’t truly additive, because once you configure the installation to charge the lithium cells sensibly, you also lack the voltage to properly recharge the lead-acid cells if they have been cycled. As a full lead-acid stands at 12.8V and a lithium bank at the same voltage is around 13% SOC, it is quite straightforward to see that the lithium is going to be doing all the work up to that point (which is nearly all the time).

The fact that the battery is packaged (usually with a lot of marketing first and foremost) only gives you protection against a battery fire and nothing else. It doesn’t change the facts that:

– The cells will get damaged if they go into over-voltage territory.

– The cells will also get damaged if they are getting chronically over-charged (which can’t be controlled with voltage). You still need to provide charge termination.

– The cells can and will go out of balance and unbalanced cells will go over-voltage at the end of every charge cycle.

– The only avenue you have to rebalance is going over-voltage periodically and if the imbalance becomes severe enough, it will cause a disconnect.

– If you happen to connect two packs in series to make 24V, then the packs have no way of balancing unless you drive a whole battery over-voltage.

Creating a hybrid bank only solves the disconnect problem. You still have to limit the maximum charging voltage to 14.0V, get rid of temperature compensation and provide charge termination when full / prevent recharging into a full battery. This last point is always the most challenging as lead-acid charge controllers are designed to keep a battery full and trickle charge it if needed. It is the case with any kind of lithium system, but a decent BMS can help you with this. With packaged lithium cells, you are usually flying blind.

I know of one installation that has been operating for a few years with some lead-acid capacity in parallel with packaged LFP cells and an adjusted charging voltage, but the owner cycles it daily and supervises charging manually. It turns into a headache with no really acceptable solution when he wants to leave the boat for a while without turning everything off.

Emergency cross-overs with lithium create a hazard as they basically connect a lithium battery to a charging system that is not suitable for it and the cross-over can be left on inadvertently. People swear that they won’t allow that to happen, but it makes no difference from the angle of liability, insurability etc. It is not something to have around. Use a jumper cable if you ever have to.

An inverter/charger can work for you, but only because you won’t be able to create a dual DC bus system.

A proper lithium-ion BMS is not an option for you if you start with packaged batteries, because you don’t have access to the cell voltages and your capacity is fragmented across a group of “12V strings”, instead of “3.3V blocks”. A BMS is all about individual cell voltages and current. When you buy a “drop-in” packaged battery, you typically buy a bunch of small cylindrical cells (because they are cheaper than prismatics) and a low-cost “disposable” BMS with every single battery. It makes little technical or economical sense to try and assemble a large capacity bank this way. If you are thinking about a BMS, then you should be buying bare cells and building your own bank.

My time got diverted by other work for quite a while, but I have been back on the new BMS project for the last few weeks with a view of finally bringing it over the line. The next iteration of the hardware will hopefully be the final one and only a little more effort is needed on the software.

Best regards,

Eric

Eric,

Thanks for the response, although I apparently was not clear enough. I will try to correct that here.

While I have tried drop-in LFP in the past, my current project is intended to use individual cells in a 800Ah pack with a BMS to control LVD and HVD events. On my previous boat I only had 2 battery banks (after my modifications), one small starting battery shared by 2 main engines and the generator and the house bank which for the last year was 2 SMART LFP drop-in batteries in 300Ah size. I only had 3 months of true cruising experience with the drop-ins, and even though I changed nothing on my solar, shore power or alternator charging systems I did not experience any power loss from disconnect situations. If these batteries were disconnecting due to high voltage during charging, it never became apparent. In that installation I only had a very small starting battery as my second bank isolated from the house bank by an ACR. Thus it is clear that I never saw a LVD event and if a HVD ever occurred it was masked by the small starter battery and the HVD re-connected after the charging current died before the small starter battery was impacted.

My goal was to avoid a major re-wire of the new boat upon arrival while still getting the benefit of a LFP house bank. That combined with the inherent issues of dealing with two engine alternators, and a new Victron Multi 3000 on an all 12V boat led me to look at alternatives that would still allow me to install a reasonably sized LFP house bank. The solar installation will be done after boat arrival as well as the LFP bank. The boat comes stock with three 200Ah starting batteries and four 200Ah house batteries. The house bank is too small and the boat has way more starting capability than it needs. So, I was intending to delete the generator starting battery by sharing the starboard engine starting battery. In addition, I would delete all the house batteries and use the Port starting battery as a “front end” to the new LFP house bank and wire it as both house and port starting battery. Thus the emergency start cross over capability should either bank be dead. In 15 years with the last catamaran, I never had to use the emergency start crossover so I don’t worry much about accidentally leaving it on.

I agree with your assessment that the SLA is not “additive” to the house bank in the normal sense. In this case, the battery needed to exist anyway to serve as the starting battery for the port engine so nothing is lost if it does double duty as the “bottom end” of the house bank. However, it seems to be just the ticket to allow for the fact that current generation charging sources are not smart enough to deal with the LFP batteries. If controlled by a BMS that can actually determine SOC based on voltage and charge rate vs capacity, an HVD could disconnect the LFP bank and allow the SLA to deal with the fact that the various charge sources are designed for lead-acid characteristics. This would also allow the SLA battery to get the charge it needs to stay healthy by staying connected during the HVD and absorbing the normal SLA charge cycle. Even with a BMS that is voltage driven only, a little hysteresis, say disconnect at 14.0 to 14.2 and connect at 13.2 to 13.4, should keep the system from cycling too much while still protecting the LFP bank.

When the LFP bank disconnects from LV, one would hope that the SLA battery still has enough capacity to start the engine. This would certainly dictate a reasonable LVD voltage set point. In addition, the loads that depleted the house bank need to be disconnected so as to not continue to deplete the starting battery (LVD disables the inverter output and most house loads). However, if this battery can stay connected to the solar controller, the LFP BMS and the engine system as well as the inverter/charger (with the inverter hopefully disabled), then charging can resume at any time there is a source and the BMS can re-connect the LFP accordingly.

I am currently proceeding on the assumption that this type of system would work. If the parallel system you are aware of is working as a manual system, then this should be able to work as an automated version of that. One of my concerns is that the BMS becomes critical to the health of the system. I am currently looking for a way to provide a fail safe, e.g. a voltage sensitive relay, for the high voltage side which I believe will be the more “active” case.

All of the above clarifies my interest in your proposed BMS and the other option I mentioned, the Orion JR. I believe this approach can “fix” all of the problems of disconnecting and re-connecting the LFP battery bank without the expense and labor of re-wiring the boat, adding external alternator controllers and dual alternator controllers, special solar controllers, separate chargers and inverters, etc. While the system may get modified over time, it makes more sense to me than replacing a lot of brand new equipment.

Bryan

Bryan,

Yes, I didn’t realise that – this time – you were not intending to use drop-ins again. The reason why they “worked” last time without changing anything else is because they have HVD levels set ridiculously high precisely to prevent them from disconnecting when installed in a lead-acid system. This allows the cells to get ruined over a period of time in order to secure the sales and, in most cases, by the time the battery is destroyed, it will hopefully also be out of warranty.

The situation with bare cells and a BMS is not much different in terms of impact on the existing installation however.

First of all, the battery disconnection scheme is a safety system that should never operate, not a way of controlling charging. Relying on HVD to terminate the charge of the lithium would be both bad design and poor in terms of performance, because the lithium would get no absorption time and this can represent around 30 minutes when charging with a lot of current, like what you get when running two engines and alternators in parallel. The consequence of this is that you still have to control your voltage properly, from all sources. This can require changes and investment.

If you use a proper lithium BMS and don’t build a dual DC bus installation, then a HVD means loss of power everywhere and the system can’t recover from a LVD, because it also loses the ability to recharge. This is due to the single disconnect point. This is generally undesirable on a boat. Leaving a lead-acid battery in the system at all times resolves the disconnect issue as discussed in this article and also leaves you with some power after a disconnect, so it mitigates the problem to some extent.

If you build a dual DC bus system instead, then you must separate the loads from the charging sources. This means some rewiring, but not rewiring “the whole boat”. In a dual DC bus system, you can also keep a lead-acid battery hanging off the charge bus to alleviate the HVD event. Or you can use a starting bank on the other side of an isolator as I describe, at least with some of the charging sources. This is where an electrical engineering component creeps in.

However, once you build a dual DC bus system, you are NOT allowed to draw current from the charge bus or charge into the load bus. This means that you have no proper place to connect a combined inverter/charger and you need to choose between the inverter or the charger and not try to fudge it.
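The rule above lends itself to a quick what-if check on a schematic. The following is only a minimal Python sketch of that idea: the `Device` record, its field names and the `bus_violations` helper are hypothetical, made up for illustration, and the check applies to equipment only, not to batteries attached to a bus.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    bus: str               # "charge" or "load" (hypothetical labels)
    sources_current: bool  # True if it can push current into its bus
    draws_current: bool    # True if it can pull current from its bus


def bus_violations(devices):
    """List every device that breaks the dual DC bus rule."""
    problems = []
    for d in devices:
        if d.bus not in ("charge", "load"):
            raise ValueError(f"{d.name}: unknown bus {d.bus!r}")
        # Nothing is allowed to draw current from the charge bus.
        if d.bus == "charge" and d.draws_current:
            problems.append(f"{d.name}: draws current from the charge bus")
        # Nothing is allowed to charge into the load bus.
        if d.bus == "load" and d.sources_current:
            problems.append(f"{d.name}: charges into the load bus")
    return problems


# A combined inverter/charger both sources and draws current,
# so it fails the check whichever bus it is wired to.
for bus in ("charge", "load"):
    print(bus_violations([Device("inverter/charger", bus, True, True)]))
```

Whichever bus the combined unit is assigned to, one violation comes back, which is the point made above: you have to choose between the inverter and the charger.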

By the same token, if you never used the cross-over switch in 15 years on your previous boat, the correct conclusion is that you don’t need it, not that building a hazard into the system is ok.

If you leave a lead-acid battery in the system after a LVD, it won’t do you any good in terms of capacity. Using a high LVD on lithium would be idiotic because you then deliberately deprive yourself of the deep-cycling ability of lithium for no gain: you would be left with a smaller lead-acid battery that will run flat before the lithium bank would really get low. LVD for a lithium house bank is typically 2.8V/cell with a warning alarm at 3.0V/cell. By then, you would have drawn your lead-acid battery down to 11.2V only and it wouldn’t be of much use to start an engine. If you want that battery for cranking, then it must be isolated like I describe so it doesn’t discharge with the lithium. A hybrid bank only resolves disconnect issues. There isn’t really anything else to be gained or obtained from it.
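The 11.2V figure follows directly from the per-cell LVD level. Here is a small Python sketch of that arithmetic, assuming a nominal 12V bank made of 4 cells in series (the cell count is an assumption, as are the function names; the 2.8V and 3.0V levels are the ones quoted above).

```python
CELLS_IN_SERIES = 4      # assumption: nominal 12V LFP bank, 4 cells in series
LVD_PER_CELL = 2.8       # low-voltage disconnect level quoted above, in volts
WARNING_PER_CELL = 3.0   # warning alarm level quoted above, in volts


def pack_voltage(per_cell_volts, cells=CELLS_IN_SERIES):
    """Bank voltage when every cell sits at the given voltage."""
    return round(per_cell_volts * cells, 2)


def low_side_state(cell_voltages):
    """Classify the bank from its lowest cell: 'ok', 'warning' or 'disconnect'."""
    lowest = min(cell_voltages)
    if lowest <= LVD_PER_CELL:
        return "disconnect"
    if lowest <= WARNING_PER_CELL:
        return "warning"
    return "ok"


print(pack_voltage(WARNING_PER_CELL))  # bank voltage at the warning alarm
print(pack_voltage(LVD_PER_CELL))      # bank voltage at the LVD, 11.2
print(low_side_state([3.20, 3.18, 2.95, 3.21]))
```

A lead-acid battery left in parallel follows the same bus voltage, which is why it ends up at 11.2V by the time the lithium bank reaches its LVD level.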

So the answer to your question is that there is no quick fix or shortcut with a dual DC bus system. If you don’t build a dual DC bus system, then you can simplify things at the cost of functionality/resilience by using a hybrid lithium/SLA scheme, but it is just going to behave like a single battery of course. It can’t be two banks while also being one. Even so, it still isn’t a quick fix.

The reason why the manual system has been working is because it has been managed manually AND all the charging voltages were adjusted. The owner has to manage it this way because it can’t be automated: he used drop-ins and the BMS is of no help. With a proper BMS, there are additional things you can do, but implementing charge termination means creating a way for the BMS to get the charge controllers to cut out and this is also a little engineering project. Regardless of what you do, once you are charging lithium cells you have to make all your charging voltages and charging profiles acceptable for the lithium. Battery charging requires voltage regulation; you can’t do it by pulling the plug.

Sometimes you can modify or trick the equipment in place to make it work more like what you need to achieve, and sometimes you have to replace it.

If you chronically fail to recharge LFP batteries properly, they develop major memory effects and you can end up with hardly any usable capacity after as little as 3 years.

The bottom line with lithium is always that the job needs to be done properly, or:

1) It will be intrinsically unsafe

2) Your investment won’t last

3) Performance will degrade early

A lot of DIY systems are unsafe and/or contain hidden flaws capable of causing very extensive damage. A number of commercial vendors have sold very high-cost safe solutions into the high-end market and got burned due to premature failures. However, it is possible to build safe and long-lasting systems and this is the topic of these articles.

Eric

Hello Eric,

Thanks very much for making this information available. It is a lot of work to document a system in a clear manner so it is useful to others. Your time is much appreciated.

I’m just designing my system now and was curious about the base in the photo of the Tyco relays. Did you make that yourself? If so, what did you use?

Thanks very much,

Bruce

s/v Migration

Hello Bruce,

Thank you for writing.

I made the base board for the Tyco relays myself indeed. The material is known as Nylatron, it is very tough and a good insulator. It is sometimes used in electrical switchyards. You don’t have to use this, but be extremely wary of the connections becoming loose over time, especially if the plastic yields. I strongly recommend having nuts below as well as above the terminals, so you don’t rely on the pressure through the base board to keep the connections tight. Some people have damaged the relays from heat because the construction wasn’t quite good enough.

Best regards,

Eric

Eric,

I am currently engineering a system for my 46’ Sailing Cat for worldwide cruising. (So far my only purchase is a quality benchtop power supply.) I have found that a sound investment at the beginning of a project saves a lot of money by the end. I have spent a lot of time reading your articles as well as many others but still have a long way to go. A few questions about this article:

I feel that a dual bus system is definitely the way to go. In your split charging schematic, you show a single disconnect between the isolator and the lithium bank. I would assume that this is for a HVD only which would allow the charge side to maintain some power to the house with the SLA essentially being the control for the chargers. I understand that this is a safety and should never actually happen if the charging is set up correctly, however, Murphy tells us that components fail at the worst possible times. Would the LVD then be prior to the load bus but still allow the charge source to recover the lithium bank? Did I miss something?

After you mentioned memory, I read a couple of research papers on it and am left with some confusion. While it appears that the accepted school of thought is that memory is not a problem, what has been your experience to alleviate this issue?

Finally, many people seem to like the Clean Power Auto BMS but they no longer sell to the DIY market. There are so many BMS options out there, I am scared of ending up with a junk BMS but I also don’t want to get something that has more features than what I really need. Paying high dollar does not always equate to high quality. And being in the middle of the South Pacific is no time to end up with no battery bank. Any advice on a good quality, simple BMS? I plan on manually balancing periodically.

Thanks in advance for any insight and for your very informative articles.

Scott,

Schematics showing a single disconnect are NOT for a dual DC bus system. Once you disconnect the lithium bank, it can no longer charge or discharge, so by extension it can’t recharge and recover from a LVD event. Whether you also lose power to the on-board loads depends on the configuration and whether there is charging current available or not. Studying the schematics answers these questions. The SLA is there to leave a load for the chargers and there are a few variations possible in this direction.
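To make the difference concrete, here is a rough Python sketch of what the lithium bank can still do after a protective trip. It assumes the usual dual DC bus behaviour, where a HVD opens only the charge bus and a LVD opens only the load bus; the topology names and the `lithium_after_trip` function are hypothetical. It says nothing about whether the loads still have power, since that depends on the rest of the configuration.

```python
def lithium_after_trip(topology, event):
    """Return what the lithium bank can still do after a HVD or LVD trip."""
    if event not in ("HVD", "LVD"):
        raise ValueError(f"unknown event {event!r}")
    if topology == "single_disconnect":
        # One disconnect point isolates the bank completely:
        # it can neither discharge nor recharge, whatever the cause.
        return {"can_discharge": False, "can_charge": False}
    if topology == "dual_bus":
        # Assumption: HVD opens the charge bus only, LVD opens the load bus only.
        if event == "HVD":
            return {"can_discharge": True, "can_charge": False}
        return {"can_discharge": False, "can_charge": True}
    raise ValueError(f"unknown topology {topology!r}")


for topology in ("single_disconnect", "dual_bus"):
    for event in ("HVD", "LVD"):
        print(topology, event, lithium_after_trip(topology, event))
```

The single-disconnect rows show why such a system cannot recover from a LVD by itself: the bank has lost its charging path along with its loads.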

A dual DC bus system is the solution of choice for an ocean cruising boat, but it tends to require more analysis and engineering to address all issues. If you feed the charge bus from an isolator on a dual DC bus system, then you can divert the current to another battery in the event of a disconnect. Great concept, but some chargers refuse to work when wired this way, because they see no battery voltage… Every lithium system tends to be a small engineering project because of differences in the equipment involved in it. Sometimes you need to test some of these things to discover what you can and can’t do.

The system needs to be robust no matter what happens. Anything that can happen will sooner or later happen. HV disconnects do happen. All it takes is a poorly regulated charging source, like many wind generators are, a lead-acid charge controller doing something stupid or just poor voltage sensing somewhere. I have seen a bank tripping because there was a problem with a cell link connection: it was tight, and yet not good. Battery systems are often also high-current systems and they can be unforgiving.

The BMS you are referring to was a little solution designed to help people playing with EVs, which is a simple application where you either have a charger and no load, or a load and no charger. Fine. Some people started installing it on boats and it caused a remarkable amount of destruction due to the single disconnect point that left the chargers connected directly into the loads with no battery to help with voltage regulation. Most of the systems built with it are not fail-safe and/or plain unsafe. I have seen entire electrical systems fried because it had simply tripped. It should be pulled out.

While you do not always get what you pay for indeed, you don’t tend to get more than what you pay for either. I believe there are a few usable BMS units out there, but nothing I am completely pleased with. So I build mine when I need one. One of these days I might manufacture a batch of interesting BMS units, but there is one unusual feature I want to include before that. A BMS should look after cell balance and should not require unusually high cell voltages to do so.

Capacity reduction from memory effect following repeated partial charges is a fact. It happens and it is visible. If you keep at it for long enough, you end up with severely reduced capacity. If the system is set up to charge properly, my experience is that the battery gradually recovers over a number of full charge cycles. If the end-of-charge voltage is insufficient, it can’t, the situation keeps getting worse and you end up with hardly any usable capacity. We are slowly coming out of winter here and my bank has seen well over a hundred partial cycles without full recharge. It charged to the termination point a few days ago and the same evening the voltage was already below 13.2V. That is memory effect: around 40% capacity unreachable because the voltage rises abnormally early in the charge cycle. It does recover as long as you don’t listen to the conservative-over-conservative charging “recommendations” forever repeated around. Lithium iron phosphate batteries need to be charged properly up to a termination point: “weak charging” is not a substitute for charge termination.

If you are in a situation where you recharge to full easily and relatively frequently with enough voltage, then memory effect is essentially non-existent. Lithium batteries need absorption just like any other battery.

Last, but not least, you need to understand what you are doing and why you are doing it when you build a lithium system. I believe you can always get there if you put enough time and effort into studying the matter. You need to perform a what-if analysis on your schematic before buying or building anything. The objective of these articles is supporting this process.

All the best,

Eric

Thank you for this series, it has been an absolute delight to absorb these articles.

It seems nearly impossible to find information this condensed and accurate on the subject. Many sources seem uninformed at best and dangerous at worst. Can’t wait for the article on charging, as it is the final pain point I’m struggling to get right, before building my own setup.

You rock.

Niko

Thanks Niko. I will try to get the article on charging out and finish the series. Finding the time to finish the draft while also progressing the BMS is a challenge…

All the best,

Eric

Hi Eric,

Many thanks for a very informative and well written series of articles on LiFePo4 installations on boats, an awful lot of information presented in a very understandable way, especially helpful is the “failure mode analysis” that covers many things that often get overlooked when designing a system. Most systems get designed around the concept of “how will it work” when they really should be designed around the concept of “how will it fail” – this tends to produce a much more robust system.

I am currently designing and planning a LiFePo4 installation on board our 42ft cat as well as a system for a 50ft cat, both systems will be installed over the coming winter and your articles have been very useful. Both systems will use Winston prismatic cells with 400AH at 12V on our boat and 400AH at 24V on the 50ft. You suggest that 200AH is enough for most boats but boats are generally more power hungry these days and both these boats are full-time liveaboards so the extra capacity is justified since the aim is to be able to run off-grid for extended time periods that requires powering fridge, freezer, large capacity watermaker and many other consumers. The larger capacity will allow for 2 or 3 days without sunshine if needed. Main charge source for both boats will be solar with alternators and mains chargers as additional sources.